Evaluating Text Precision in AI-Generated Images: A Comparison of DALL-E 3 and Mistral

This post evaluates how well DALL-E 3 and Mistral generate images containing the precise text, words, and formatting given in the prompts, using GPT-4o as an OCR tool for verification.

Introduction

Text precision matters whenever an AI-generated image has to reproduce its prompt literally. Presentations, educational materials, and marketing slides all depend on text that appears in the visual exactly as written. This evaluation compares how faithfully Mistral and DALL-E 3 reproduce specified text, judging textual accuracy, clarity, and overall adherence to the prompt. GPT-4o serves as the OCR (Optical Character Recognition) tool for verification.

Evaluation Methodology

Both models, DALL-E 3 and Mistral, were asked to generate an image containing the exact text specified in a prompt. To assess the results, I used the OCR capabilities of GPT-4o to extract the text from each generated image and compared it with the text requested in the prompt.

The evaluation follows these steps:

1. Prompt Consistency: The same prompt is given to both models with instructions to generate an image with an exact list of words.
2. Prompt Variation: Three different prompts are used with the same instructions but different lists of words.
3. Generate images using:
   - DALL-E 3 via the OpenAI API with a Python script.
   - Mistral Chat via its web-based chat interface at chat.mistral.ai.
4. Extract text from the generated images using:
   - GPT-4o via a Python script for OCR using the OpenAI API.

Note: Using the OpenAI API requires an active OpenAI API key, configured in the script for authentication and request processing. This applies to steps 3 and 4.
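The two API-based steps (image generation with DALL-E 3 and text extraction with GPT-4o) could look roughly like the sketch below. This is not the script used for this evaluation, only a minimal illustration that assumes the official `openai` Python package and an `OPENAI_API_KEY` environment variable. The function names, the image size, and the wording of `OCR_INSTRUCTION` are hypothetical choices made for the example.

```python
import base64
import os

# Hypothetical instruction text; the wording used in the evaluation is not published.
OCR_INSTRUCTION = (
    "Extract all text visible in this image exactly as written, "
    "as a comma-separated list."
)


def generate_image_url(prompt: str) -> str:
    """Request one DALL-E 3 image and return the URL the API responds with."""
    from openai import OpenAI  # third-party package: pip install openai

    client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])
    response = client.images.generate(
        model="dall-e-3", prompt=prompt, size="1024x1024", n=1
    )
    return response.data[0].url


def build_ocr_messages(image_bytes: bytes) -> list:
    """Build a chat payload that sends the image inline as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": OCR_INSTRUCTION},
                {
                    "type": "image_url",
                    "image_url": {"url": f"data:image/png;base64,{encoded}"},
                },
            ],
        }
    ]


def extract_text(image_path: str) -> str:
    """Ask GPT-4o to transcribe the text in a locally saved PNG image."""
    from openai import OpenAI  # third-party package: pip install openai

    client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])
    with open(image_path, "rb") as image_file:
        messages = build_ocr_messages(image_file.read())
    response = client.chat.completions.create(model="gpt-4o", messages=messages)
    return response.choices[0].message.content
```

The same `extract_text` call would work for the Mistral images once they are saved locally from the chat interface, which keeps the OCR step identical for both models.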

Image Generation and Results

Prompt 1: Large Language Models (LLMs)

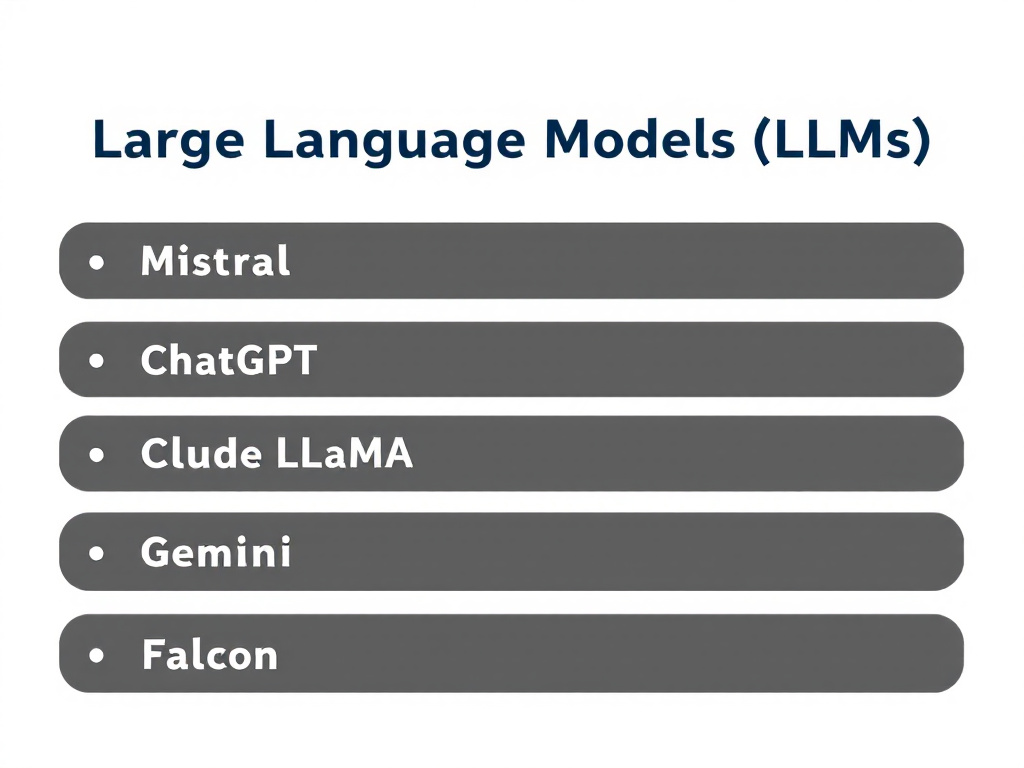

"A clean and professional presentation slide design with the title 'Large Language Models (LLMs)' at the top center. Below, list exactly these and only these names of LLMs as bullet points: 'Mistral,' 'ChatGPT,' 'Claude,' 'LLaMA,' 'Gemini,' and 'Falcon.' Use a plain white background with simple black text to ensure clarity, and no other text or decorative elements."

Figure 1: Image generated by DALL-E 3 based on the prompt for Large Language Models (LLMs).

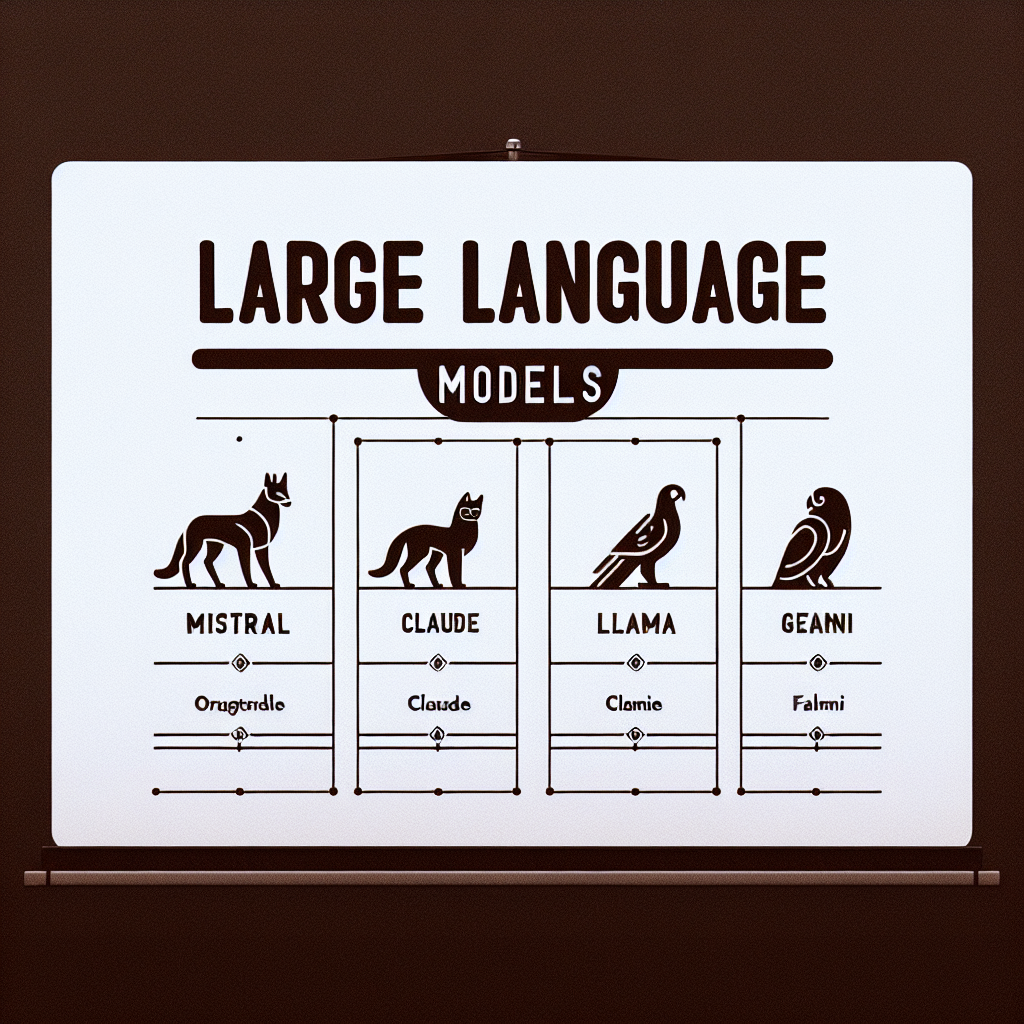

Figure 2: Image generated by Mistral using the prompt for Large Language Models (LLMs).

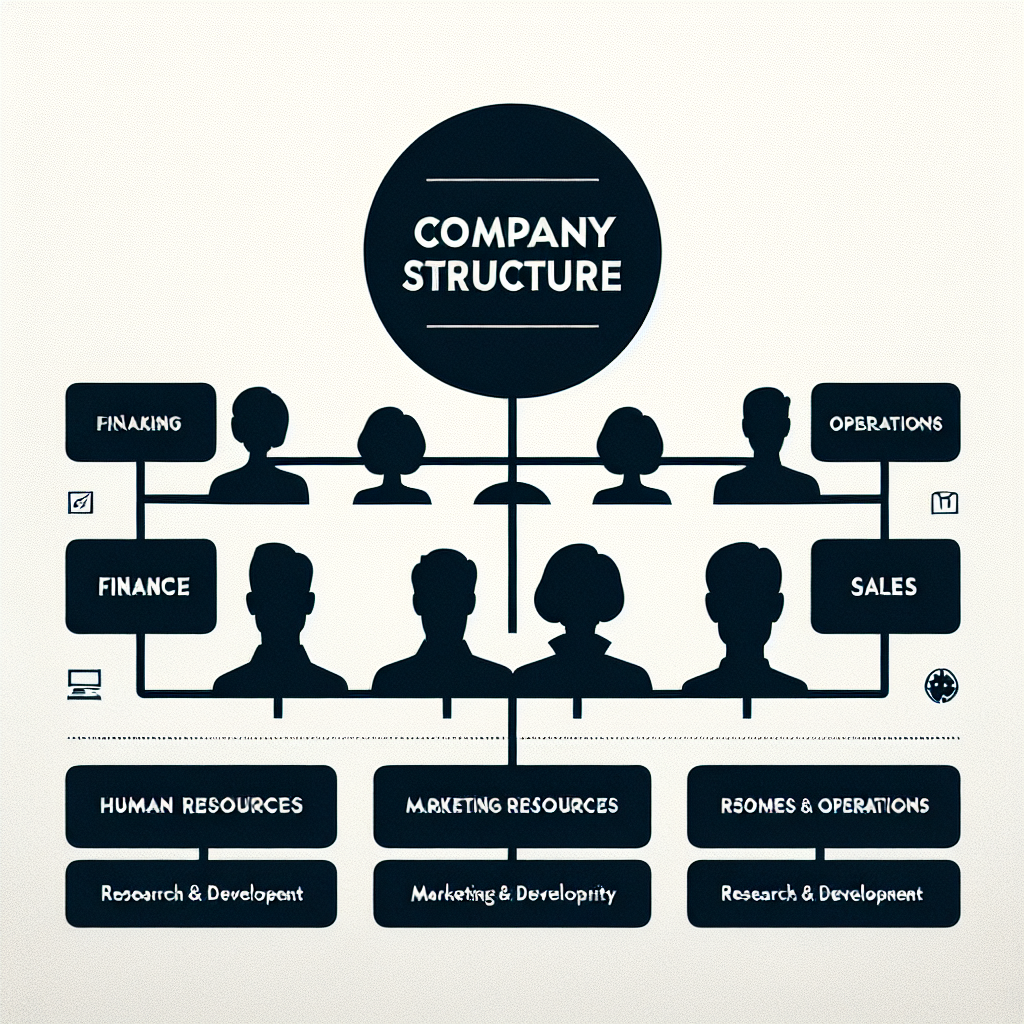

Prompt 2: Company Structure

"A clean and professional presentation slide design with the title 'Company Structure' at the top center. Below, list exactly these and only these department names as bullet points: 'Human Resources,' 'Finance,' 'Marketing,' 'Sales,' 'Operations,' and 'Research & Development.' Use a plain white background with simple black text to ensure clarity, and no other text or decorative elements."

Figure 3: Image generated by DALL-E 3 based on the prompt for Company Structure.

Figure 4: Image generated by Mistral using the prompt for Company Structure.

Prompt 3: University Departments

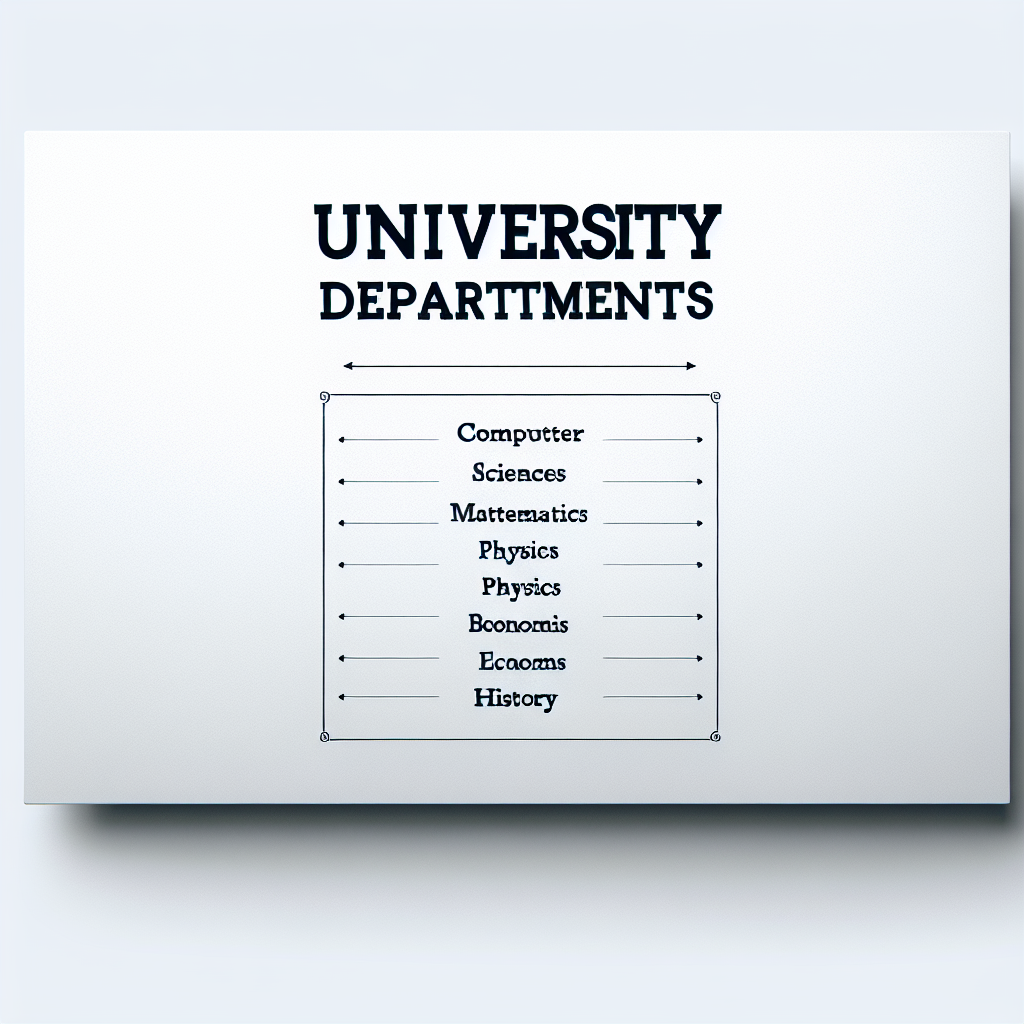

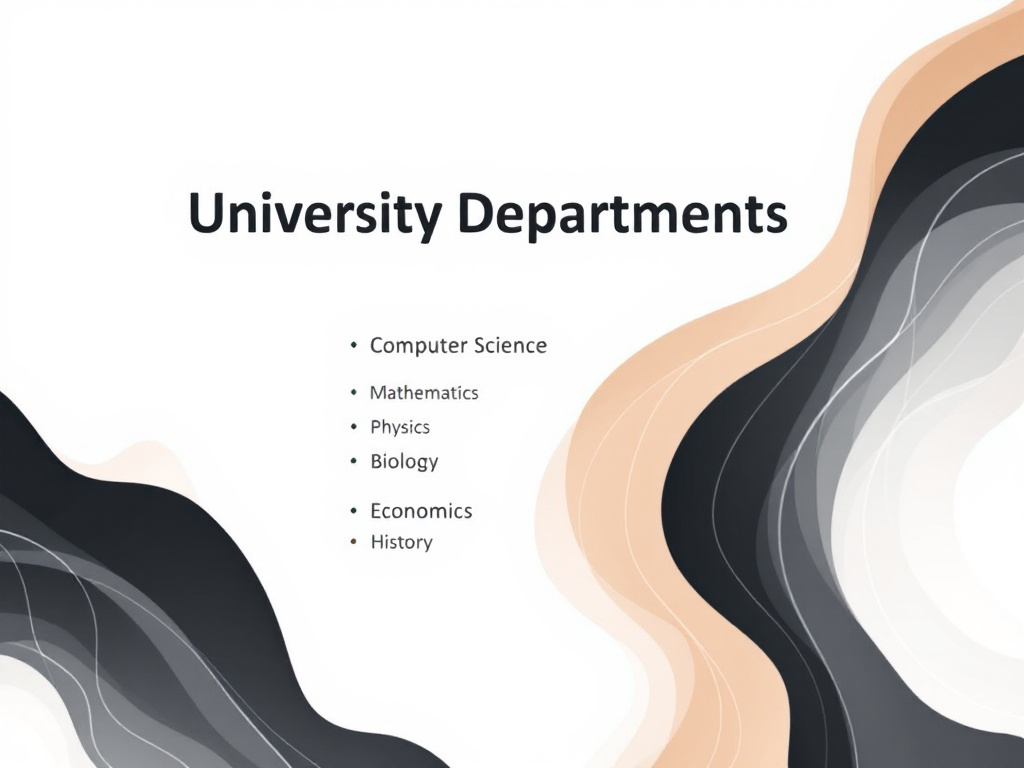

"A clean and professional presentation slide design with the title 'University Departments' at the top center. Below, list exactly these and only these university departments as bullet points: 'Computer Science,' 'Mathematics,' 'Physics,' 'Biology,' 'Economics,' and 'History.' Use a plain white background with simple black text to ensure clarity, and no other text or decorative elements."

Figure 5: Image generated by DALL-E 3 based on the prompt for University Departments.

Figure 6: Image generated by Mistral using the prompt for University Departments.
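The three prompts share one template and differ only in the title, the kind of item, and the word list. A small helper like the one below can build them from a single source so that both models receive identical wording. The function name `build_slide_prompt` and its parameters are assumptions for this sketch, not part of the original scripts.

```python
def build_slide_prompt(title: str, item_kind: str, items: list) -> str:
    """Fill the shared slide template with a title and an exact word list."""
    if len(items) < 2:
        raise ValueError("the template expects at least two items")
    # Quote every item the way the prompts do: 'A,' 'B,' and 'C.'
    quoted = " ".join(f"'{item},'" for item in items[:-1])
    quoted += f" and '{items[-1]}.'"
    return (
        "A clean and professional presentation slide design "
        f"with the title '{title}' at the top center. "
        f"Below, list exactly these and only these {item_kind} as bullet points: "
        f"{quoted} "
        "Use a plain white background with simple black text to ensure clarity, "
        "and no other text or decorative elements."
    )


# Rebuild Prompt 3 from its three variable parts.
print(
    build_slide_prompt(
        "University Departments",
        "university departments",
        ["Computer Science", "Mathematics", "Physics", "Biology", "Economics", "History"],
    )
)
```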

Results

The following are the OCR results obtained using GPT-4o:

Prompt 1

| Model | Extracted Text |
|---|---|
| DALL-E 3 | LARGE LANGUAGE MODELS, MISTRAL, CLAUDE, LLAMA, GEANI, Oragrtrdle, Claude, Clamie, Falmi |
| Mistral | Large Language Models (LLMs), Mistral, ChatGPT, Clude LLaMA, Gemini, Falcon |

Prompt 2

| Model | Extracted Text |
|---|---|
| DALL-E 3 | COMPANY STRUCTURE, FINANCING, OPERATIONS, FINANCE, SALES, HUMAN RESOURCES, MARKETING RESOURCES, RSOMES & OPERATIONS, Research & Development, Marketing & Developity, Research & Development |
| Mistral | Company Structure, Human Resources, Marketing, Sales, Operations, Research & Development |

Prompt 3

| Model | Extracted Text |
|---|---|
| DALL-E 3 | UNIVERSITY DEPARTMENTS, Computter, Sciences, Matematics, Physics, Physisc, Bconomis, Ecoooms, History |
| Mistral | University Departments, Computer Science, Mathematics, Physics, Biology, Economics, History |
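One way to turn these tables into numbers is to compare each extracted list against the words the prompt asked for. The sketch below is an illustrative scoring helper, not the method used in this post. It assumes the OCR output is a comma-separated string, as in the tables, and ignores letter case by default. The name `compare_text` is hypothetical.

```python
def compare_text(expected: list, extracted_text: str, case_sensitive: bool = False) -> dict:
    """Split the OCR output on commas and compare it with the expected entries."""

    def norm(value: str) -> str:
        value = value.strip()
        return value if case_sensitive else value.lower()

    extracted = [part.strip() for part in extracted_text.split(",") if part.strip()]
    extracted_keys = {norm(part) for part in extracted}
    expected_keys = {norm(entry) for entry in expected}
    return {
        # Expected entries that appear in the OCR output.
        "matched": [e for e in expected if norm(e) in extracted_keys],
        # Expected entries the OCR output does not contain.
        "missing": [e for e in expected if norm(e) not in extracted_keys],
        # OCR entries that were never requested (misspellings, duplicates, inventions).
        "extra": [p for p in extracted if norm(p) not in expected_keys],
    }


EXPECTED_PROMPT_3 = [
    "University Departments", "Computer Science", "Mathematics",
    "Physics", "Biology", "Economics", "History",
]
DALLE_PROMPT_3 = (
    "UNIVERSITY DEPARTMENTS, Computter, Sciences, Matematics, Physics, "
    "Physisc, Bconomis, Ecoooms, History"
)
MISTRAL_PROMPT_3 = (
    "University Departments, Computer Science, Mathematics, Physics, "
    "Biology, Economics, History"
)

for model, text in [("DALL-E 3", DALLE_PROMPT_3), ("Mistral", MISTRAL_PROMPT_3)]:
    result = compare_text(EXPECTED_PROMPT_3, text)
    print(model, len(result["matched"]), "matched,",
          len(result["missing"]), "missing,", len(result["extra"]), "extra")
# DALL-E 3 3 matched, 4 missing, 6 extra
# Mistral 7 matched, 0 missing, 0 extra
```

Passing `case_sensitive=True` makes the check stricter, which matters here because DALL-E 3 rendered some entries in capitals although the prompt did not.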

Conclusion

This evaluation highlights the strengths and weaknesses of DALL-E 3 and Mistral in generating precise text within images. Key findings are as follows:

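- Mistral demonstrates greater textual accuracy and adherence to prompts than DALL-E 3. According to the OCR output, Mistral's Prompt 3 image matched the requested text exactly, while its Prompt 1 result reads "Clude" for "Claude" and its Prompt 2 result contains no "Finance" entry. DALL-E 3 introduced misspellings, duplicates, and unrequested entries in all three prompts. Changes to the prompt may improve DALL-E 3's results, but validating that would need further exploration, which was beyond the scope of this evaluation.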

- Using the OpenAI API for DALL-E 3 was straightforward.

- OCR with GPT-4o through the OpenAI API worked well, extracting text from the generated images even in complex cases such as misspelled or distorted words, which made it a practical evaluation tool.

In an upcoming post, I will share the Python scripts used for both image generation and OCR, providing insights into how these tools can be implemented effectively in similar evaluations.
